An online game for assembling shelves, developed as a proof of concept. Credit: University of Southern California

As robots increasingly team up to work with humans, from nursing homes to warehouses to factories, they need to be able to offer proactive support. But first, robots must learn something we know instinctively: how to anticipate people's needs.

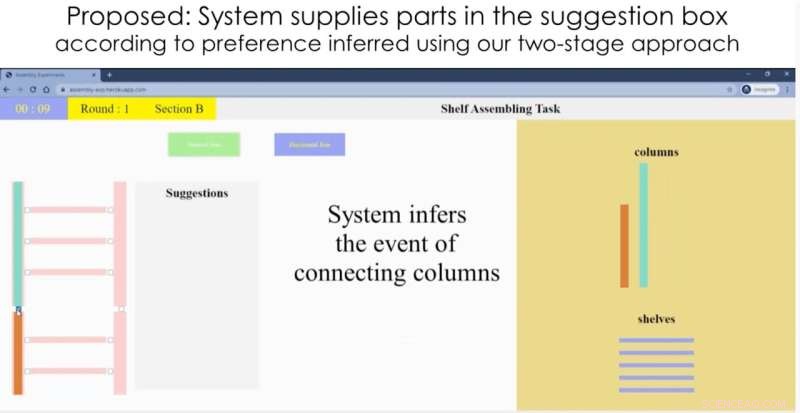

With that goal in mind, researchers at the USC Viterbi School of Engineering have created a new robotic system that accurately predicts how a human will build an IKEA bookcase, and then lends a hand, supplying the shelf, bolt, or screw needed to complete the task. The research was presented at the International Conference on Robotics and Automation on May 30, 2021.

"We want the human and the robot to work together: a robot can help you do things faster and better by performing supporting tasks, like fetching things," said the study's lead author, Heramb Nemlekar. "Humans will still perform the primary actions, but can offload simpler secondary actions to the robot."

Nemlekar, a Ph.D. student in computer science, is advised by Stefanos Nikolaidis, an assistant professor of computer science. He co-authored the paper with Nikolaidis and SK Gupta, a professor of aerospace and mechanical engineering and computer science who holds the Smith International Professorship in Mechanical Engineering.

Adapting to variations

In 2018, a robot created by researchers in Singapore learned to assemble an IKEA chair on its own. In this new study, the USC research team instead chose to focus on human-robot collaboration.

There are advantages to combining human intelligence with robot strength. In a factory, for example, a human operator can control and monitor production while the robot performs the physically strenuous work. Humans are also more skilled at fiddly, delicate tasks, such as wiggling a screw around to make it fit.

The key challenge to overcome: humans tend to perform actions in different orders. For instance, imagine you're building a bookcase. Do you tackle the easy tasks first, or go straight for the difficult ones? How does the robot helper quickly adapt to variations in its human partners?

"Humans can verbally tell the robot what they need, but that's not efficient," said Nikolaidis. "We want the robot to be able to infer what the human wants, based on some prior knowledge."

It turns out robots can gather knowledge much like we do as humans: by "watching" people and seeing how they behave. While we all tackle tasks in different ways, people tend to cluster around a handful of dominant preferences. If the robot can learn these preferences, it has a head start on predicting what you might do next.

A good collaborator

Based on this knowledge, the team developed an algorithm that uses artificial intelligence to classify people into dominant "preference groups," or types, based on their actions. The robot was fed a kind of "manual" on humans: data gathered from an annotated video of 20 people assembling the bookcase. The researchers found that people fell into four dominant preference groups.
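The article does not spell out how the grouping works. Purely as an illustration, the sketch below swaps in a standard technique, k-medoids clustering of demonstrated action orders, using the Kendall tau distance (the number of action pairs two people perform in opposite order). This is not the researchers' published method. The action labels such as `s2L` ("attach shelf 2 to the left side panel"), the six toy demonstrations, and `k=2` are all hypothetical; the study itself worked from 20 people's demonstrations and found four groups.

```python
from itertools import combinations


def kendall_tau_distance(seq_a, seq_b):
    """Number of action pairs that the two sequences perform in opposite order.
    Assumes both sequences are orderings of the same set of actions."""
    pos_b = {action: i for i, action in enumerate(seq_b)}
    # combinations() preserves seq_a's order, so x always precedes y in seq_a
    return sum(1 for x, y in combinations(seq_a, 2) if pos_b[x] > pos_b[y])


def k_medoids(sequences, k, max_iter=100):
    """Plain k-medoids: alternate nearest-medoid assignment and medoid updates.
    The first k sequences seed the medoids, so results are deterministic."""
    n = len(sequences)
    dist = [[kendall_tau_distance(a, b) for b in sequences] for a in sequences]

    def assign(medoids):
        # Each sequence joins the cluster of its nearest medoid (ties -> lowest index)
        return [min(range(k), key=lambda c: dist[i][medoids[c]]) for i in range(n)]

    medoids = list(range(k))
    for _ in range(max_iter):
        labels = assign(medoids)
        new_medoids = []
        for c in range(k):
            members = [i for i in range(n) if labels[i] == c]
            if not members:  # keep an empty cluster's old medoid
                new_medoids.append(medoids[c])
                continue
            # New medoid: the member with the smallest total distance to its cluster
            new_medoids.append(min(members, key=lambda m: sum(dist[m][j] for j in members)))
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return assign(medoids), [sequences[m] for m in medoids]


# Hypothetical demonstrations ("s2L" = attach shelf 2 to the left side panel, etc.)
demos = [
    ["s1L", "s2L", "s3L", "s1R", "s2R", "s3R"],  # one side first
    ["s1L", "s1R", "s2L", "s2R", "s3L", "s3R"],  # shelf by shelf
    ["s1L", "s2L", "s3L", "s1R", "s3R", "s2R"],
    ["s1L", "s1R", "s2L", "s2R", "s3R", "s3L"],
    ["s2L", "s1L", "s3L", "s1R", "s2R", "s3R"],
    ["s1R", "s1L", "s2L", "s2R", "s3L", "s3R"],
]

labels, medoids = k_medoids(demos, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

Each resulting medoid serves as a representative order for its group, which is one simple way to give a robot a compact "manual" of typical human strategies.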

In an IKEA furniture assembly task, a human stayed in a "work area" and performed the assembly actions, while the robot brought the required materials from the storage area. Credit: University of Southern California

For instance, do you connect all the shelves to the frame on just one side first, or do you connect each shelf to the frame on both sides before moving on to the next shelf? Depending on your preference category, the robot should bring you either a new shelf or a new set of screws. In a real-life IKEA furniture assembly task, a human stayed in a "work area" and assembled the bookcase, while the robot, a Kinova Gen 2 robot arm, learned the human's preferences and brought the required materials from a storage area.
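The shelf-versus-screws decision can be sketched in a few lines, assuming each preference group is summarized by one canonical action order. The action labels, the two orderings, and the rule that a shelf's first connection calls for a new shelf while its second needs only screws are illustrative assumptions, not details of the USC system.

```python
# Hypothetical canonical orders for two preference groups
# ("s2L" = attach shelf 2 to the left side panel, and so on)
ONE_SIDE_FIRST = ["s1L", "s2L", "s3L", "s1R", "s2R", "s3R"]
SHELF_BY_SHELF = ["s1L", "s1R", "s2L", "s2R", "s3L", "s3R"]


def next_action(canonical, done):
    """First action in the group's canonical order not yet performed, or None."""
    for action in canonical:
        if action not in done:
            return action
    return None


def what_to_fetch(canonical, done):
    """Assumed rule: a shelf's first connection needs a new shelf;
    its second connection needs only a new set of screws."""
    action = next_action(canonical, done)
    if action is None:
        return None  # assembly finished, nothing to fetch
    shelf = action[:-1]  # "s2L" -> "s2"
    already_placed = any(a[:-1] == shelf for a in done)
    return "screws" if already_placed else "shelf"


done = ["s1L"]  # the person has attached shelf 1 on the left
print(what_to_fetch(ONE_SIDE_FIRST, done))  # shelf  (next action: s2L)
print(what_to_fetch(SHELF_BY_SHELF, done))  # screws (next action: s1R)
```

After the same first action, the two groups call for different deliveries, which is why knowing the preference category matters.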

"The system very quickly associates a new user with a preference, with only a few actions," said Nemlekar.

"That's what we do as humans. If I want to work with you, I'm not going to start from zero. I'll watch what you do, and then infer from that what you might do next."
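One common way to get this "few actions" behavior is a Bayesian update over the preference groups. The sketch below rests on stated assumptions: each group is represented by a hypothetical canonical order, and a person in that group performs the group's next expected action with probability `1 - eps`, choosing uniformly among the other remaining actions otherwise. This noise model and `eps = 0.1` are illustrative choices, not the researchers' published method.

```python
# Hypothetical canonical orders ("s2L" = attach shelf 2 to the left side panel)
GROUPS = {
    "one_side_first": ["s1L", "s2L", "s3L", "s1R", "s2R", "s3R"],
    "shelf_by_shelf": ["s1L", "s1R", "s2L", "s2R", "s3L", "s3R"],
}


def action_likelihood(canonical, done, action, eps=0.1):
    """P(action | group, actions so far) under an assumed noise model:
    the group's next expected action with prob. 1 - eps, any other
    remaining action uniformly otherwise."""
    remaining = [a for a in canonical if a not in done]
    if action not in remaining:
        return 0.0
    if len(remaining) == 1:
        return 1.0
    if action == remaining[0]:
        return 1.0 - eps
    return eps / (len(remaining) - 1)


def infer_preference(observed, prior=None, eps=0.1):
    """Bayesian update of the belief over groups after each observed action."""
    belief = dict(prior) if prior else {g: 1.0 / len(GROUPS) for g in GROUPS}
    done = []
    for action in observed:
        for group, canonical in GROUPS.items():
            belief[group] *= action_likelihood(canonical, done, action, eps)
        total = sum(belief.values())
        if total == 0:
            raise ValueError(f"action {action!r} is impossible under every group")
        belief = {g: p / total for g, p in belief.items()}  # renormalize
        done.append(action)
    return belief


belief = infer_preference(["s1L", "s1R"])
print(round(belief["shelf_by_shelf"], 3))  # 0.973
```

With a uniform prior, the first action `s1L` fits both groups equally, but the second action `s1R` pushes the belief in `shelf_by_shelf` to roughly 0.97, mirroring the quick association Nemlekar describes.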

In this initial version, the researchers entered each action into the robotic system manually, but future iterations could learn by "watching" the human partner using computer vision. The team is also working on a new test case: humans and robots working together to build, and then fly, a model airplane, a task requiring close attention to detail.

Refining the system is a step towards having "intuitive" helper robots in our daily lives, said Nikolaidis. Although the focus is currently on collaborative manufacturing, the same insights could be used to help people with disabilities, with applications including robot-assisted eating or meal prep.

"If we will soon have robots in our homes, in our work, in care facilities, it's important for robots to infer and adapt to people's preferences," said Nikolaidis. "The robot needs to be a teammate and a good collaborator. I think having some notion of user preference and being able to learn variability is what will make robots more accepted."